In their Big Ideas 2026 series, a16z made a claim that should be obvious but apparently still needs saying: enterprise AI's bottleneck is not intelligence. It is entropy.

The next billion-dollar companies, they argue, will not be built by teams chasing smarter models. They will be built by teams that make AI survive the chaos of real-world operations. Reliability. Consistency. Graceful degradation. The boring stuff that separates a demo from a product.

We have been living this lesson at deetech for months. It is the single most important thing we have learned.

The 97-to-50 problem

Here is a pattern we see constantly in AI. A team publishes a model that achieves 97% accuracy on a benchmark dataset. The paper gets cited. The demo gets retweeted. Investors get excited. Then someone deploys it on real data and the number drops to 50-65%.

This is not a bug. It is a fundamental gap between lab conditions and production conditions, and it kills companies.

In deepfake detection, the gap is especially brutal. Academic benchmarks use high-resolution, uncompressed images generated by a known set of models. Production data -- the actual photos submitted with insurance claims -- has been compressed through messaging apps, screenshotted, forwarded via email, photographed in poor lighting at bad angles, and uploaded through web portals that compress again on ingest. By the time an image reaches the claims system, it has been through multiple rounds of quality degradation that the benchmark never simulated.

A model trained on clean data and evaluated on clean data will report 97% accuracy, and that number will mean absolutely nothing in production.

Why this keeps happening

The incentive structure in AI research optimises for benchmark performance. Papers get accepted when they beat the state of the art on a standard dataset. Funding follows citations. Hiring follows publications. The entire pipeline from PhD to product is built around a metric that does not measure what matters.

Production environments introduce noise that benchmarks deliberately exclude. Compression artefacts. Format conversions. Metadata stripping. Device-specific quirks. User behaviour that no dataset designer anticipated. The model that was intelligent enough to spot subtle pixel-level anomalies in a lab image is completely lost when those same anomalies have been destroyed by JPEG compression.
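To make the mechanism concrete, here is a toy sketch of how a lossy step can erase a subtle signal. It is an illustration under loud assumptions, not real JPEG and not deetech's detector: real JPEG quantizes frequency coefficients in 8x8 blocks, whereas the hypothetical `coarse_quantize` below simply snaps pixel values to a grid, and `looks_tampered` is a made-up stand-in for a detector.

```python
from statistics import median


def looks_tampered(pixels, threshold=2):
    """Toy detector (hypothetical): flag a row of pixels if any value
    deviates from the row median by more than `threshold`."""
    mid = median(pixels)
    return any(abs(p - mid) > threshold for p in pixels)


def coarse_quantize(pixels, step=16):
    """Snap each value to the nearest multiple of `step`.
    A crude stand-in for lossy compression, NOT an actual JPEG codec."""
    return [round(p / step) * step for p in pixels]


# A flat grey row, and the same row with one subtly altered pixel.
CLEAN = [128] * 8
TAMPERED = [128, 128, 128, 131, 128, 128, 128, 128]

if __name__ == "__main__":
    print("lab input flagged:       ", looks_tampered(TAMPERED))
    print("after quantization:      ", looks_tampered(coarse_quantize(TAMPERED)))
```

The altered pixel is visible to the toy detector on the lab input, but once the row has been quantized it is indistinguishable from the clean row. The detector did not get worse; the evidence it relied on is gone.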

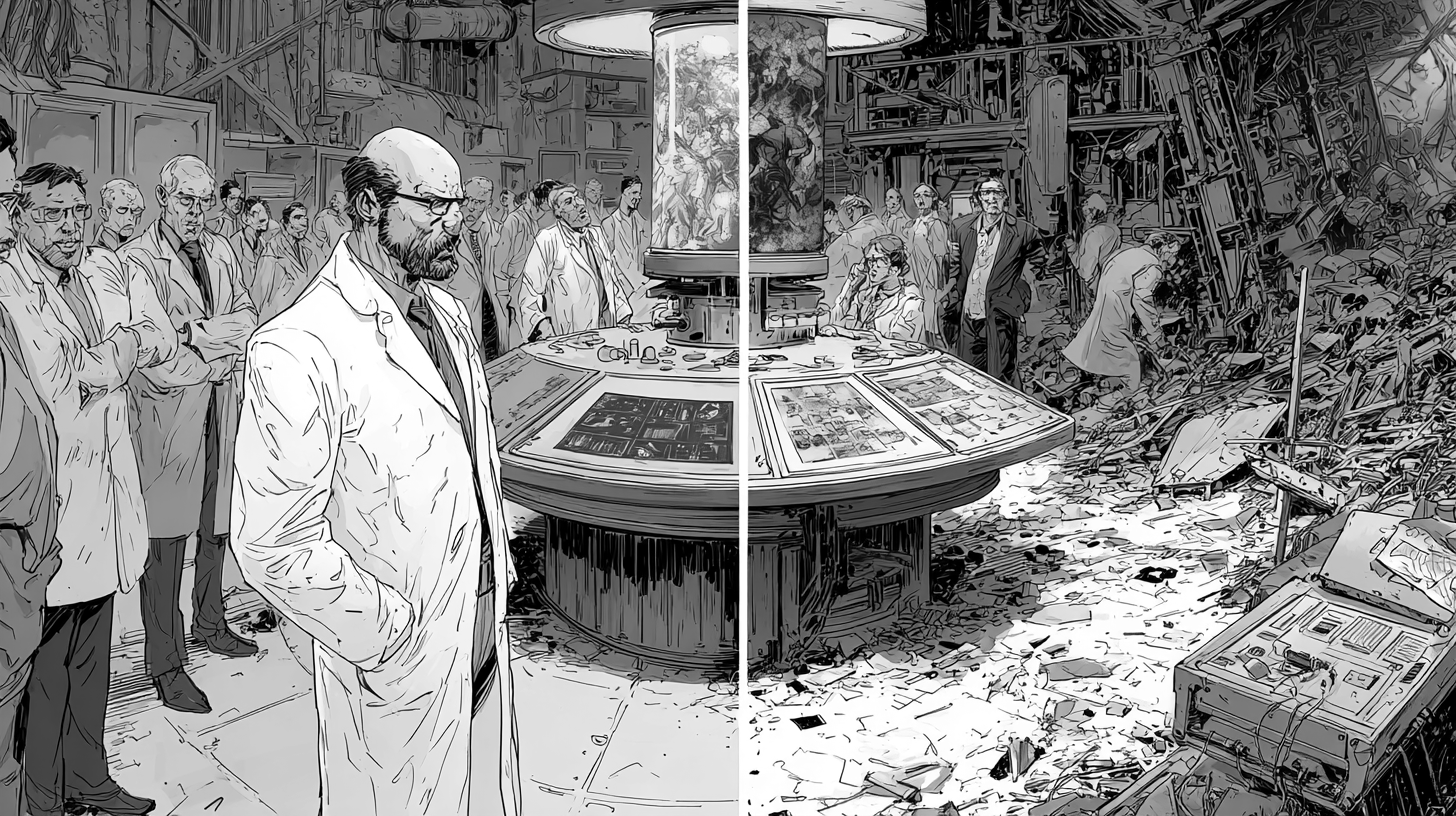

This is what a16z means by entropy. Real-world systems are messy. The signal degrades. The distribution shifts. The edge cases multiply. And the model that looked brilliant on a leaderboard becomes a liability.

Reliability is a design choice, not a tuning exercise

You cannot take a model optimised for clean benchmarks and "tune" it to handle production chaos. The architecture has to be designed for degraded inputs from day one.

At deetech, this means training on compressed images, not clean ones. It means evaluating on smartphone photos taken in dimly lit car parks, not studio-quality test sets. It means testing against generators the model has never seen, because in production, the generator is always unknown. It means building the entire pipeline -- from ingestion to analysis to reporting -- around the assumption that the input will be worse than expected.
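One common way to test against unseen generators is a leave-one-generator-out split: hold out every sample from one generator, train on the rest, and repeat for each generator. The sketch below is a minimal version of that idea, not a description of deetech's actual evaluation code. The sample IDs, the generator names, and the `leave_one_generator_out` helper are all hypothetical, and it covers synthetic samples only; genuine photos would need their own disjoint train/test split.

```python
from collections import defaultdict


def leave_one_generator_out(samples):
    """Yield (held_out_generator, train_ids, test_ids) for each generator.

    `samples` is an iterable of (sample_id, generator_name) pairs describing
    synthetic images. The held-out generator never appears in `train_ids`.
    """
    by_generator = defaultdict(list)
    for sample_id, generator in samples:
        by_generator[generator].append(sample_id)

    for held_out in sorted(by_generator):
        train_ids = [
            sample_id
            for generator in sorted(by_generator)
            if generator != held_out
            for sample_id in by_generator[generator]
        ]
        test_ids = list(by_generator[held_out])
        yield held_out, train_ids, test_ids


# Hypothetical sample IDs and generator names, for illustration only.
SAMPLES = [
    ("a1", "gen_a"),
    ("a2", "gen_a"),
    ("b1", "gen_b"),
    ("c1", "gen_c"),
]

if __name__ == "__main__":
    for held_out, train_ids, test_ids in leave_one_generator_out(SAMPLES):
        print(f"held out {held_out}: train={train_ids} test={test_ids}")
```

The number to watch is the score on each held-out generator, since that is the closest offline proxy for a generator nobody has catalogued yet.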

This is not glamorous work. Nobody publishes a paper titled "We Made Our Model Work On Bad JPEGs." But it is the difference between a product that survives its first customer and one that does not.

The startup graveyard is full of smart models

Look at the AI startups that have struggled despite strong technology. Almost universally, the pattern is the same: impressive demo, strong benchmark results, venture funding, then a slow realisation that the model does not work in the customer's environment. The sales cycle stalls because the pilot fails. The pilot fails because the data is messier than the training set. The team scrambles to patch edge cases. The patches create new edge cases. The customer loses patience.

Meanwhile, the boring competitor -- the one that built for production conditions from the start, the one with lower benchmark numbers but consistent real-world performance -- quietly closes deals and compounds.

a16z is not saying intelligence does not matter. Of course it does. But intelligence without reliability is a science project. Reliability without peak intelligence is a product. The market pays for products.

What this means for builders

If you are building an AI product, here is the test that matters: take your model, feed it the worst data your customer will realistically send you, and measure the output. Not average-case performance on a curated test set. Worst-case performance on data that has been through the wringer.
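A minimal sketch of that test, assuming each evaluation result is tagged with the condition the input arrived in: compute accuracy per condition and report the worst one alongside the average. The condition names, labels, and helper functions here are hypothetical, and the sample results are invented purely to show the arithmetic.

```python
from collections import defaultdict


def accuracy_by_condition(results):
    """Return {condition: accuracy} for (condition, predicted, actual) triples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for condition, predicted, actual in results:
        total[condition] += 1
        correct[condition] += int(predicted == actual)
    return {condition: correct[condition] / total[condition] for condition in total}


def overall_accuracy(results):
    """Average-case accuracy across every result, ignoring condition."""
    results = list(results)
    return sum(int(p == a) for _, p, a in results) / len(results)


def worst_case(results):
    """Return (condition, accuracy) for the worst-performing condition."""
    per_condition = accuracy_by_condition(results)
    worst = min(per_condition, key=per_condition.get)
    return worst, per_condition[worst]


# Invented results for illustration: (condition, predicted label, true label).
RESULTS = [
    ("clean", "fake", "fake"),
    ("clean", "real", "real"),
    ("clean", "fake", "fake"),
    ("clean", "real", "real"),
    ("recompressed", "fake", "fake"),
    ("recompressed", "real", "fake"),
]

if __name__ == "__main__":
    print("overall:", round(overall_accuracy(RESULTS), 3))
    print("worst:  ", worst_case(RESULTS))
```

In this made-up data the overall figure looks respectable because clean inputs dominate the set, while the recompressed slice is at coin-flip level. Reporting the minimum rather than the mean keeps that slice from hiding.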

If the number holds, you have a product. If it collapses, you have a demo. The gap between those two things is where most AI startups die.

At deetech, we report 98%+ accuracy on real claims media. That number is measured on compressed, degraded, real-world images -- not benchmark data. It includes 95%+ accuracy on content from generators the model has never seen. Those are production numbers, not lab numbers, and the distinction matters more than any architectural innovation we have made.

a16z is right. The next wave of AI winners will not be the smartest. They will be the most reliable. Build accordingly.